What Do Amazon Reviews and Reddit Threads Reveal About Why Educational Toys Fail?

Turning Negative Feedback into R&D Gold: A Data-Driven Guide for Educational Toys

Every year, millions of dollars are poured into developing educational toys that end up gathering dust in closets or, worse, flooding Amazon with one-star reviews and Reddit with parental frustration threads. Behind these commercial disappointments often lies a critical disconnect: the gap between what designers think children and parents need and what they actually experience in daily use. However, buried within this sea of negative feedback lies an invaluable, real-world R&D lab. By systematically analyzing product reviews, forum discussions, and social media sentiment, toy companies can decode the precise reasons for failure and transform them into a competitive advantage. This isn't about damage control—it's about using failure as the most honest form of market research.

This guide explores how to mine unstructured data from Amazon reviews and platforms like Reddit to uncover the root causes of educational toy failures. We’ll move beyond star ratings to perform sentiment and thematic analysis, identify recurring failure patterns (from durability issues to educational value gaps), and translate these insights into actionable R&D priorities. You'll learn how to distinguish genuine product flaws from isolated complaints, set up a continuous feedback monitoring system, and build products that succeed because they've already learned from others' mistakes.

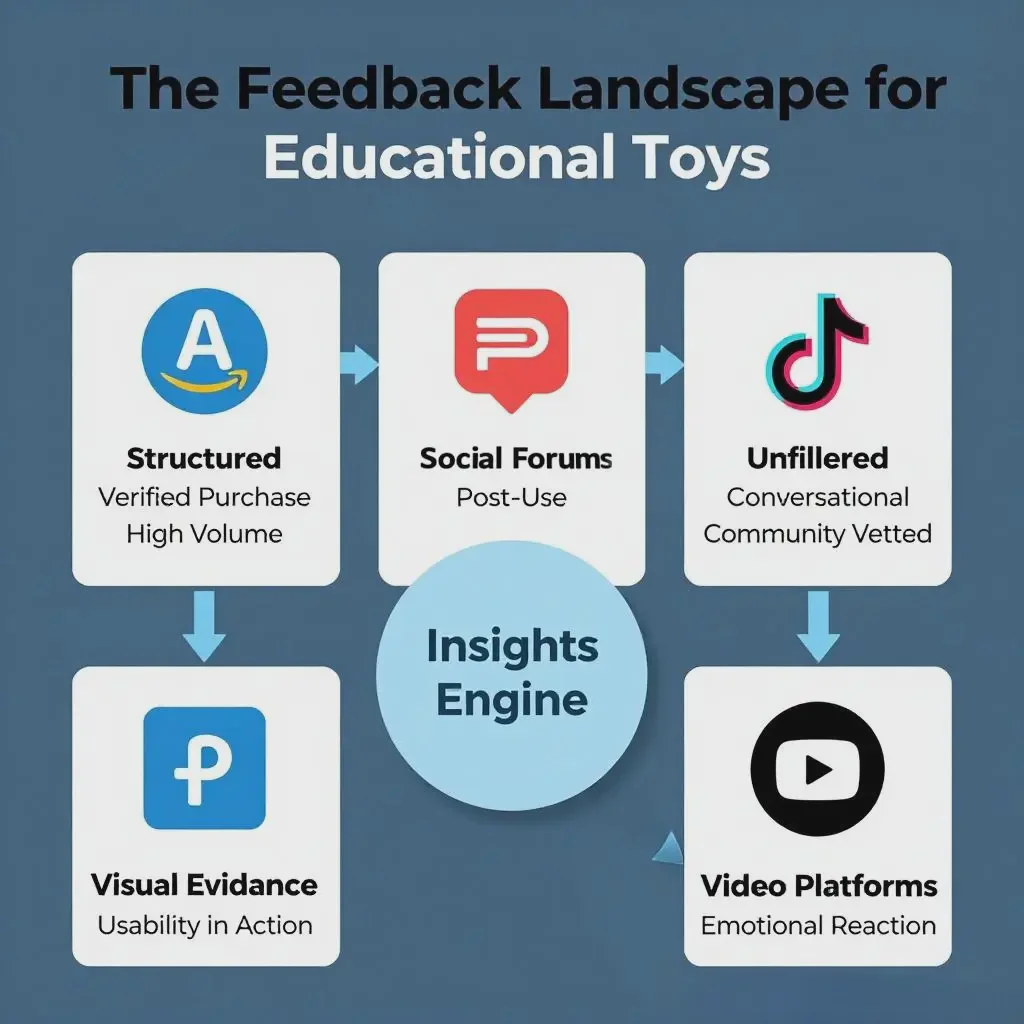

To harness the power of this feedback, we must first know where to look and how to listen. The digital landscape is vast, but specific platforms have become the de facto public forums for unfiltered, post-purchase truth-telling. Let's start by mapping these critical data sources.

Mining for Truth: Where to Find the Most Honest Feedback on Educational Toys?

The first step in data-driven R&D is sourcing high-quality, unbiased data. While formal surveys and focus groups have their place, the most candid insights often emerge spontaneously after purchase, when users have no incentive to please the company. Two primary sources offer a goldmine of this unfiltered feedback: the structured but public world of e-commerce reviews and the sprawling, conversational universe of social forums.

Amazon reviews provide structured data at scale, much of it tagged as verified purchases, which makes them ideal for quantitative analysis of recurring issues. Reddit and niche forums (like r/ScienceBasedParenting, r/Teachers, or specific parenting boards) offer qualitative depth, detailed problem narratives, and discussions about unmet needs. Beyond these two, YouTube "unboxing" and "play test" videos are a useful supplement because they visually demonstrate usability failures that text reviews might miss.

The key is to analyze these sources for different intelligence. Amazon’s verified purchase badge and the ability to filter by star rating allow you to isolate the most critical feedback from actual buyers. Look for reviews that include photos or videos—they are gold for diagnosing physical defects. On Reddit, the value lies in the thread. A single complaint post might be an outlier, but if it sparks a chain of "Me too!" replies or detailed troubleshooting discussions, you've identified a systemic issue. Forums dedicated to specific educational philosophies (Montessori, Waldorf, STEM) are particularly valuable for assessing whether your toy aligns with core pedagogical expectations. Remember, you're not just collecting complaints; you're gathering evidence of the gap between design intent and real-world use.
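The "Me too!" signal described above can be approximated with a simple count of corroborating replies in a thread. The following is a minimal sketch, assuming the replies have already been collected as plain strings; the phrase list, the `corroboration_count` and `is_systemic` helpers, and the threshold of three are illustrative assumptions, not a validated rule.

```python
import re

# Hypothetical phrases that suggest a reply confirms the original complaint.
CORROBORATION = re.compile(
    r"\b(?:me too|same here|same issue|happened to (?:us|me))\b",
    re.IGNORECASE,
)

def corroboration_count(replies):
    """Count replies containing at least one corroborating phrase."""
    return sum(1 for reply in replies if CORROBORATION.search(reply))

def is_systemic(replies, min_corroborations=3):
    """Flag a thread when enough replies echo the complaint (threshold is an assumption)."""
    return corroboration_count(replies) >= min_corroborations

thread_replies = [
    "Me too! Ours broke in a week.",
    "Same here, the battery door never stays shut.",
    "Have you tried contacting support?",
    "Happened to us as well.",
]

print(corroboration_count(thread_replies))  # 3
print(is_systemic(thread_replies))          # True
```

A phrase match like this will miss paraphrased agreement and sarcasm, so it works best as a first-pass filter that tells an analyst which threads to read in full.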

Beyond the Star Rating: What Patterns Signal a Fundamental Design Flaw?

A one-star review is a signal, but a cluster of reviews mentioning the same specific problem is a diagnosis. Moving from raw data to actionable insight requires pattern recognition. By categorizing and quantifying feedback, you can distinguish a random manufacturing defect from a catastrophic design failure that will doom your product in the market.

Recurring critical patterns include: Durability Failures (breaks within weeks/months), Usability Issues (overly complex assembly, unclear instructions for child or parent), Educational Value Gaps ("my child lost interest in 10 minutes," "teaches nothing as advertised"), Safety Concerns (small parts, sharp edges, chemical smells), and Age Appropriateness Mismatches (too advanced or too simple). The frequency and emotional intensity of these themes are more telling than the average star rating.

To perform this analysis, use text mining and Natural Language Processing (NLP) techniques. Simple keyword searches can surface volume (for example, "break*", "snap*", and "crack*" for durability). More advanced sentiment analysis can detect frustration levels. Look for themes in 4- and 5-star reviews too; these often contain "soft complaints" or wish-list items that hint at the next iteration's features. A powerful technique is competitive benchmarking: run the same analysis on your competitors' most critical reviews. If competitors all fail on durability while your own reviews highlight educational value gaps, you know where you can double down (durability) and what you urgently need to fix (educational value). This pattern analysis transforms anecdotal complaints into a prioritized failure mode map, showing you not just that your product has problems, but which problems are most systemic and damaging to your brand promise.
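The keyword-search step can be sketched in a few lines of Python. This is an illustrative example, assuming reviews are available as plain strings; the `THEME_PATTERNS` lexicon and the helper names are hypothetical and would need tuning against real review text.

```python
import re
from collections import Counter

# Hypothetical theme lexicon; the stems are illustrative, not a validated taxonomy.
THEME_PATTERNS = {
    "durability": re.compile(r"\b(?:break|broke|snap|crack)\w*", re.IGNORECASE),
    "usability": re.compile(r"\b(?:assembl|instruction|confus)\w*", re.IGNORECASE),
    "educational_value": re.compile(r"\b(?:lost interest|bored|teaches nothing)", re.IGNORECASE),
    "safety": re.compile(r"\b(?:sharp|chok|small parts|chemical smell)\w*", re.IGNORECASE),
}

def tag_themes(text):
    """Return the set of themes whose pattern appears in the review text."""
    return {theme for theme, pattern in THEME_PATTERNS.items() if pattern.search(text)}

def theme_counts(reviews):
    """Count how many reviews mention each theme (one count per review per theme)."""
    counts = Counter()
    for review in reviews:
        counts.update(tag_themes(review))
    return counts

sample_reviews = [
    "The hinge snapped after two weeks.",
    "Instructions were confusing and the lid cracked.",
    "My child lost interest in 10 minutes.",
]

counts = theme_counts(sample_reviews)
print(counts["durability"])         # 2
print(counts["educational_value"])  # 1
```

Stem matching of this kind is deliberately crude: a stem such as "break" also matches "breakfast", so counts should be spot-checked by reading a sample of the matched reviews before they feed a decision.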

From Complaint to Blueprint: How to Prioritize and Action Feedback in R&D?

Identifying failure patterns is only half the battle. The real challenge for R&D and product managers is deciding what to fix first with limited resources. Not all negative feedback is created equal. A systematic framework is needed to triage issues based on their impact on user experience, alignment with brand promise, and feasibility of correction.

Use a Feedback Prioritization Matrix. Plot issues on two axes: Impact (How many users are affected? How severely does it undermine core function?) and Effort (Cost, time, and complexity to fix). High-Impact, Low-Effort issues are "Quick Wins." High-Impact, High-Effort issues are "Major Projects." Low-Impact issues are parked or rejected. Always prioritize issues related to Safety and Core Function Failure above all else.
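The matrix can be expressed as a small classification routine. This is a sketch under stated assumptions: the 1-to-5 scoring scale, the threshold of 3, the `Issue` fields, and the "Fix First" label for safety and core-function issues are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass

@dataclass
class Issue:
    name: str
    impact: int                    # 1 (low) to 5 (high); the scale is an assumption
    effort: int                    # 1 (low) to 5 (high)
    safety_or_core: bool = False   # safety or core-function failure

def classify(issue, threshold=3):
    """Map an issue to a quadrant; safety and core-function issues override the matrix."""
    if issue.safety_or_core:
        return "Fix First"
    if issue.impact < threshold:
        return "Park or Reject"
    return "Quick Win" if issue.effort < threshold else "Major Project"

# Lower number means it is worked on earlier.
ORDER = {"Fix First": 0, "Quick Win": 1, "Major Project": 2, "Park or Reject": 3}

def prioritize(issues):
    """Sort by quadrant, then by higher impact, then by lower effort."""
    return sorted(issues, key=lambda i: (ORDER[classify(i)], -i.impact, i.effort))

issues = [
    Issue("box artwork", impact=1, effort=1),
    Issue("shallow learning content", impact=4, effort=5),
    Issue("unclear instructions", impact=4, effort=1),
    Issue("hinge fracture", impact=5, effort=3, safety_or_core=True),
]

for issue in prioritize(issues):
    print(f"{classify(issue):15} {issue.name}")
```

In practice the impact score would come from the theme frequencies in the review analysis, while the effort score would come from an engineering estimate.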

The translation into R&D action requires specificity. Instead of a vague directive like "improve durability," the feedback analysis should produce an Engineering Change Order (ECO) with context. For example: "Redesign hinge 'A' on component 'B'. Failure mode: plastic fatigue fracture after ~50 openings (per reviews). User consequence: renders the main feature unusable (High Impact). Suggested approach: increase wall thickness to 2 mm and switch to a polypropylene blend (Medium Effort)." This data-backed specificity prevents endless debate and accelerates iteration. Furthermore, carry the feedback into the prototyping and testing phase. If reviews said an assembly was too hard for parents, include that task in your next parent-user test and measure the time and frustration level. This closes the loop, ensuring the "fix" actually solves the real-world problem identified in the data.

Building a Feedback Loop: How to Institutionalize Data-Driven Design?

For data-driven R&D to move from a one-off project to a core competency, it must be embedded into the organization's workflow. This means creating a systematic, ongoing process—a feedback loop—that continuously pulls insights from the market and pushes informed improvements back into development. This transforms reactive firefighting into proactive innovation.

Establish a Continuous Feedback Monitoring System. This involves: 1) Automated Data Collection (tools that gather reviews and forum mentions through scraping or platform APIs), 2) Regular Analysis Cadence (weekly digests, quarterly deep-dives), 3) Cross-functional Review (R&D, Marketing, and Customer Service meet to discuss insights), and 4) Closed-Loop Tracking (linking product changes back to specific feedback and monitoring for sentiment improvement). The goal is to make user voice a regular agenda item.
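Closed-loop tracking (step 4) can be illustrated by comparing how often a complaint appears before and after a product change ships. The sketch below assumes reviews are already collected as dictionaries with a `date` and a `text` field; the field names, the sample data, and the change date are hypothetical.

```python
from datetime import date

def theme_rate(reviews, keyword):
    """Share of reviews whose text mentions the keyword (0.0 for an empty list)."""
    if not reviews:
        return 0.0
    hits = sum(1 for r in reviews if keyword in r["text"].lower())
    return hits / len(reviews)

def before_after(reviews, keyword, change_date):
    """Return the keyword mention rate before and on/after the change date."""
    before = [r for r in reviews if r["date"] < change_date]
    after = [r for r in reviews if r["date"] >= change_date]
    return theme_rate(before, keyword), theme_rate(after, keyword)

# Hypothetical data: a hinge redesign is assumed to ship on 2024-06-01.
reviews = [
    {"date": date(2024, 3, 2), "text": "Hinge broke in a week."},
    {"date": date(2024, 4, 11), "text": "Kids love it."},
    {"date": date(2024, 5, 5), "text": "The hinge cracked already."},
    {"date": date(2024, 5, 20), "text": "Good value."},
    {"date": date(2024, 6, 15), "text": "Sturdy and fun."},
    {"date": date(2024, 7, 1), "text": "Hinge feels loose."},
    {"date": date(2024, 7, 19), "text": "Great for counting practice."},
    {"date": date(2024, 8, 3), "text": "Holding up well."},
]

rate_before, rate_after = before_after(reviews, "hinge", date(2024, 6, 1))
print(rate_before, rate_after)  # 0.5 0.25
```

A falling mention rate is only suggestive with samples this small, and units from old inventory can keep generating reviews after the change date, so the comparison is best read alongside the reviews themselves.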

Institutionalizing this process requires both tools and culture. Invest in a social listening or review analytics platform (e.g., Brandwatch, ReviewTrackers) that can handle large volumes and provide alerts. More importantly, foster a company culture that values critical feedback as an asset, not a threat. Celebrate when a team identifies a failure pattern early, preventing a larger launch disaster. Share positive case studies where data-driven changes led to measurable review score improvements. Finally, consider proactive engagement. When you identify a thoughtful critic on Reddit, a respectful response or invitation to a beta test group can turn a detractor into a valuable co-creator. This loop doesn't just improve existing products; it generates ideas for entirely new ones by highlighting unmet needs and frustrations with the entire category, positioning your company not just to fix things, but to lead the market.

Conclusion

In the competitive landscape of educational toys, success is no longer just about creative concepts and attractive packaging; it's about resilience, learning, and adaptation. The candid, often brutal, feedback found in Amazon reviews and Reddit threads is not a sign of market rejection but an open-source textbook on real-world product performance. Companies that learn to read this textbook systematically gain an unparalleled advantage.

By moving beyond defensive postures and embracing these data streams, R&D transforms from an isolated, intuition-driven department into the central nervous system of customer-centric innovation. The patterns of failure reveal the precise boundaries of design assumptions, the true stresses of child's play, and the exact expectations of discerning parents and educators. Prioritizing and acting on these insights allows you to allocate resources where they will have the greatest impact on user satisfaction and brand loyalty.

Ultimately, building a data-driven feedback loop is an investment in longevity. It creates products that earn not just sales, but trust and advocacy. It fosters a culture of humility and continuous improvement that is essential in a market where today's hot toy is tomorrow's lesson learned. The companies that will thrive are those that listen hardest to their critics, for in those voices lies the clear, uncompromising roadmap to what the market truly wants and needs.